35mm is an established standard for general photography. It was natural for Apple to target roughly that focal length in 2007, but since then the iPhone camera has mostly featured even wider lenses. Phone thickness limited focal length, and perhaps customers favor wide lenses as well. Then came the iPhone 7 Plus. The dual camera system has significant implications for the iPhone as a photographic tool. I was not provided a sample iPhone 7 Plus for this article, so the comparison photos were taken using a camera set to 28mm and 56mm equivalent focal lengths.

Figure 1

iPhone 7 Plus dual lens promotional image, from Apple's website.

Focal Length

Cameras are defined by physical constraints. The lens must gather light from the world and project it onto a sensor. Every lens has a field of view, which determines whether it can take in a wide, sweeping vista all at once or works more like binoculars, picking out a small area. This property of the lens is expressed as a focal length in millimeters (mm), with higher numbers giving a narrower view and lower numbers a wider view.

Apple's previous camera, on the iPhone 6S, was a 29mm ƒ/2.2 unit. For the iPhone 7 Plus, they have gone both wider and narrower. The wide lens is 28mm ƒ/1.8, while the telephoto lens is 56mm ƒ/2.8. iOS 10 presents the telephoto lens as an "optical zoom" and uses a 2x button to change cameras. This might give the impression that 1x is the baseline photo mode, but that isn't correct. Both are baseline photo modes, useful for different things, and each is very capable.
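To put numbers on "wider" and "narrower", here is a minimal sketch that converts a 35mm-equivalent focal length into a diagonal angle of view. It assumes an ideal rectilinear lens focused at infinity and the diagonal of a 36mm x 24mm full-frame sensor; the function name is made up for illustration.

```python
import math

# Diagonal of a 36mm x 24mm "full frame", the reference for equivalent focal lengths.
FULL_FRAME_DIAGONAL_MM = math.hypot(36.0, 24.0)


def diagonal_fov_degrees(equiv_focal_length_mm: float) -> float:
    """Diagonal angle of view for a 35mm-equivalent focal length.

    Assumes an ideal rectilinear lens focused at infinity.
    """
    half_angle = math.atan(FULL_FRAME_DIAGONAL_MM / (2 * equiv_focal_length_mm))
    return math.degrees(2 * half_angle)


for focal_length in (28, 29, 56):
    fov = diagonal_fov_degrees(focal_length)
    print(f"{focal_length}mm equivalent: {fov:.1f} degrees diagonal")
```

The 28mm and 29mm lenses land within a couple of degrees of each other, while the 56mm lens sees a much narrower cone, which is why the two cameras behave like different tools rather than two zoom steps.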

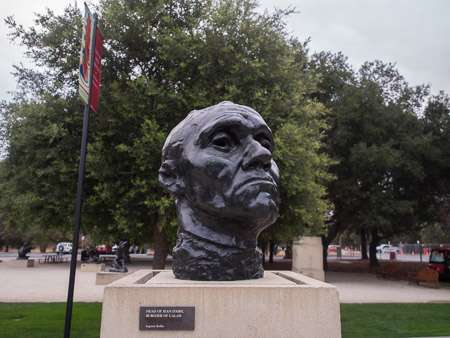

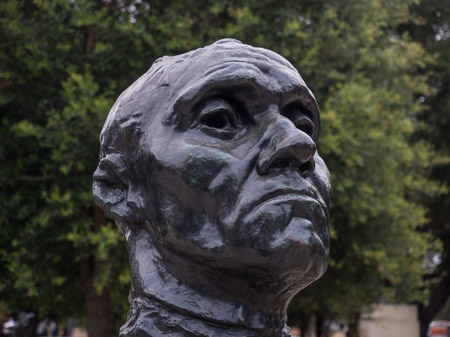

Figure 2 is similar to what an iPhone 6S would capture at that distance. Figure 3 was taken from exactly the same distance, but at 56mm. The telephoto framing is clearly better for a classic head-and-shoulders portrait. Perspective distortion on the head is acceptably low, and I was able to stand at a reasonable distance.

Figure 2

Head of Jean d’Aire, Burgher of Calais. Photo captured at 28mm.

Figure 3

Same camera location as Figure 2. Photo captured at 56mm.

If you try to reproduce the same subject framing with the wide-angle lens, there is quite a bit of perspective distortion (figure 4). The portrait is a lot less flattering, and you have to get the camera very close. A lot more of the environment is also captured, so there is less subject isolation. The telephoto camera captures roughly the center one quarter of the wide-angle camera's frame (figure 5). Neither focal length is better or worse; they are complementary tools for taking different kinds of photos.
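The "center one quarter" figure follows from the 2x focal length ratio: the telephoto frame is half as wide and half as tall as the wide frame, so it covers a quarter of the area. A small sketch, assuming ideal rectilinear lenses that share an optical axis (the real cameras sit side by side, so the crop is only approximately centered). The 4032 x 3024 image size is used purely as an example, and the function name is hypothetical.

```python
def tele_crop_in_wide(width_px: int, height_px: int,
                      wide_mm: float = 28.0, tele_mm: float = 56.0):
    """Return (left, top, crop_width, crop_height) of the region of the
    wide frame that the telephoto lens would cover.

    Assumes both lenses are rectilinear and share the same optical axis.
    """
    scale = wide_mm / tele_mm  # linear fraction of the wide frame
    crop_w = round(width_px * scale)
    crop_h = round(height_px * scale)
    left = (width_px - crop_w) // 2
    top = (height_px - crop_h) // 2
    return left, top, crop_w, crop_h


left, top, crop_w, crop_h = tele_crop_in_wide(4032, 3024)
area_fraction = (crop_w * crop_h) / (4032 * 3024)
print(left, top, crop_w, crop_h, area_fraction)
```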

Figure 4

The same head framing as Figure 3, captured at 28mm.

Figure 5

What the telephoto lens would capture inside a wide-angle frame.

56mm: A Camera Odyssey

Previously the iPhone had a significant limitation as a camera. It was wide angle only. Some marvelous photos are taken with a wide lens; many are not. According to ExploreCams the most common focal length used to take photos with DSLRs is 50mm. The most commonly used prime lens in DSLR systems is a fast 50mm. This is not an accident. 50mm is a well-placed compromise between wide and narrow focal lengths. It's a very "human size" lens in terms of field of view, and it lets you isolate subjects effectively. Just as with 35mm in the first iPhone, it's no surprise that Apple picked something around 50mm for their longer lens.

Figure 6

Photo taken at 56mm. It would not have been possible to take this photo with a 28mm lens because it was unsafe to fall into the gorge.

Photography is an amalgamation of art and mechanics. While this new lens might only have twice the focal length, the artistic flexibility it adds yields disproportionately better results. Take portraiture as an example. People behave very differently when an object is shoved up into their face. Being able to take that portrait at 30 inches (76 cm) rather than 16 inches (40 cm) is huge for the natural expression of the model. It is even more significant for candids, or for shooting from a less invasive location at an event. This one seemingly minor change has a large effect on the flexibility offered by the camera.

Figure 7

Photo taken at 50mm. It would not have been possible to take this photo with a 28mm lens because perspective distortion would have ruined the lines of the car. Reproduced in Display P3 gamut.

Technical Limitations

While the telephoto lens unlocks tremendous potential, there are also areas where it will perform less well than its wide-angle colleague. Mainly, low-light performance will be notably worse. To package this lens, it is possible that Apple had to include a smaller sensor or a telephoto group. Additionally, they had to reduce the aperture to ƒ/2.8, so it lets in less than half the light of the ƒ/1.8 wide-angle lens.
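The "less than half the light" claim can be checked with a quick calculation. Light gathered per unit of sensor area scales with the inverse square of the f-number, so the sketch below compares ƒ/2.8 against ƒ/1.8 and also expresses the gap in stops. It ignores lens transmission losses and any difference in sensor size, and the function names are made up for illustration.

```python
import math


def relative_light(f_number: float, reference_f_number: float) -> float:
    """Light per unit sensor area at f_number, relative to reference_f_number."""
    return (reference_f_number / f_number) ** 2


def stops_slower(f_number: float, reference_f_number: float) -> float:
    """How many stops of light are lost going from the reference to f_number."""
    return 2 * math.log2(f_number / reference_f_number)


ratio = relative_light(2.8, 1.8)
stops = stops_slower(2.8, 1.8)
print(f"f/2.8 passes about {ratio:.0%} of the light of f/1.8 ({stops:.2f} stops less)")
```

The ratio works out to a little over 40 percent, or roughly one and a quarter stops, which would need to be made up with a longer exposure or a higher ISO.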

iPhones are not the most ergonomic or stable camera platform, and the increased focal length will exaggerate that. Keeping shake-induced blur to a minimum requires the shutter be faster at all times. A trick used by the wide angle lens in low light is to stabilize the optics, and leave the shutter open as long as possible to gather the most light. That trick will be less effective with the telephoto lens, especially if it lacks stabilization.

Figure 8

Photo taken at 50mm. It would not have been possible to take this photo with a 28mm lens because getting too close would have occluded the important natural light. Reproduced in Display P3 gamut.

The Camera You Have

If you get an iPhone 7 Plus, don't just use the 2x mode when you want a little more zoom. Try it out for a while. Think at fifty-six millimeters. Frame shots with it, isolate subjects with it, shoot parts of things rather than the whole thing, find interesting perspectives, fill the foreground, and so much more. I am excited because this puts a much more versatile photography tool into the hands of millions of people.

It might not be an ultra-fast, stabilized camera. It might not even be the same sensor. But it's good enough to change the way you think about phone photography. Which is really the point, and almost certainly part of the reason Apple did it. You have to care about photography to build this feature.

Figure 9

Photo taken at 56mm. Maybe you could have taken this with a 28mm lens. But it's so cute that I don't care.

Lenses

Although "telephoto" sounds a bit funny for a lens that is only 56mm, it is technically possible and perhaps not just marketing. If the sensor is 1/3-inch, the lens likely contains a telephoto group, and the focal length of the lens is very likely longer than the lens's physical length. Therefore it is, probably, actually a telephoto lens. The math doesn't quite work out for it to be a 1/3-inch sensor without altering the optical center. So either there is a telephoto group, or the sensor is smaller than 1/3-inch.

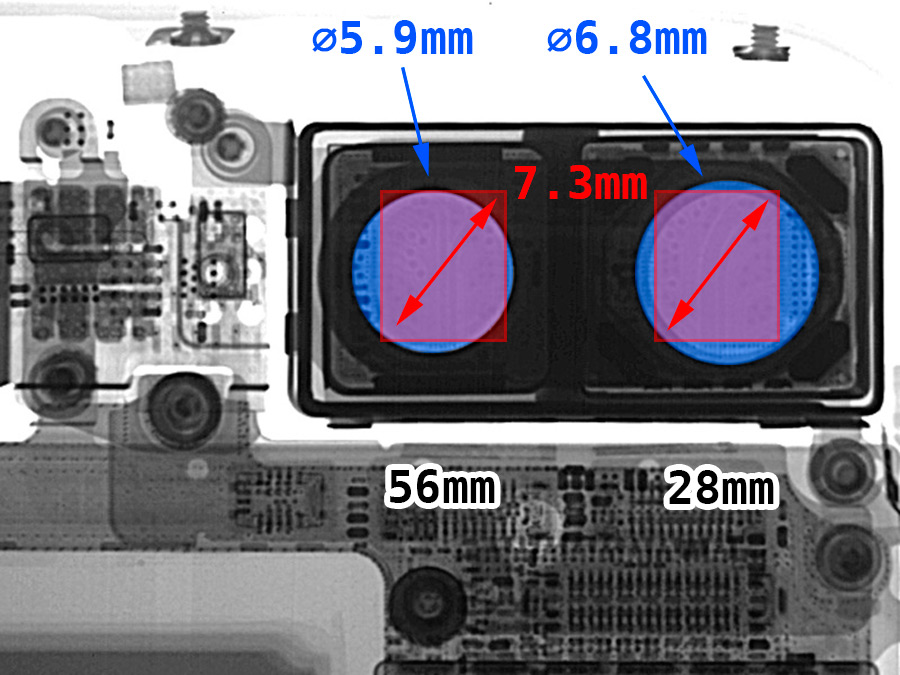

iFixit has once again done a great service by tearing down brand new hardware. Based on their iPhone 7 Plus teardown two things seem fairly clear.

- The sensor packages for both cameras appear to be the same size (figure 11).

- The 56mm lens is not stabilized. It does include a focusing voice coil (VCM) as usual, but no electromagnetic suspension system.

Figure 11

X-Ray view of the iPhone 7 Plus camera system with annotations. Note that the aperture of the housing is not indicative of the objective lens size or the effective lens aperture. Image sourced from iFixit.

I'm not clear on where the sensor effective area begins and ends in figure 11, but the outline of the package is consistent for both sensors. Therefore 7.3mm is almost certainly not the actual effective diagonal, and it's probably a 6mm sensor. EXIF reports the focal length of the telephoto lens to be 6.6mm, but the math says that the focal length on a 1/3-inch sensor should actually be 7.8mm (thicker than the phone).
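The arithmetic behind those numbers can be sketched as follows. It assumes the usual convention of comparing sensor diagonals against a 36mm x 24mm full frame, and takes the 6mm diagonal for a 1/3-inch sensor from the text above. The function names are hypothetical.

```python
import math

# Diagonal of a 36mm x 24mm full frame, used as the "equivalent" reference.
FULL_FRAME_DIAGONAL_MM = math.hypot(36.0, 24.0)


def actual_focal_length_mm(equiv_mm: float, sensor_diagonal_mm: float) -> float:
    """Physical focal length needed to match a 35mm-equivalent focal length."""
    return equiv_mm * sensor_diagonal_mm / FULL_FRAME_DIAGONAL_MM


def implied_sensor_diagonal_mm(equiv_mm: float, actual_mm: float) -> float:
    """Sensor diagonal implied by a reported physical focal length."""
    return FULL_FRAME_DIAGONAL_MM * actual_mm / equiv_mm


# 56mm equivalent on a 6mm-diagonal (1/3-inch) sensor.
needed = actual_focal_length_mm(56.0, 6.0)
# Diagonal implied if the 6.6mm EXIF value is taken at face value.
implied = implied_sensor_diagonal_mm(56.0, 6.6)
print(f"needed focal length: {needed:.2f} mm, implied diagonal: {implied:.2f} mm")
```

Under these assumptions, a 6mm sensor needs a focal length of about 7.8mm, while a 6.6mm focal length would imply a diagonal closer to 5.1mm. This is the mismatch that either a smaller sensor or a telephoto group has to explain.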

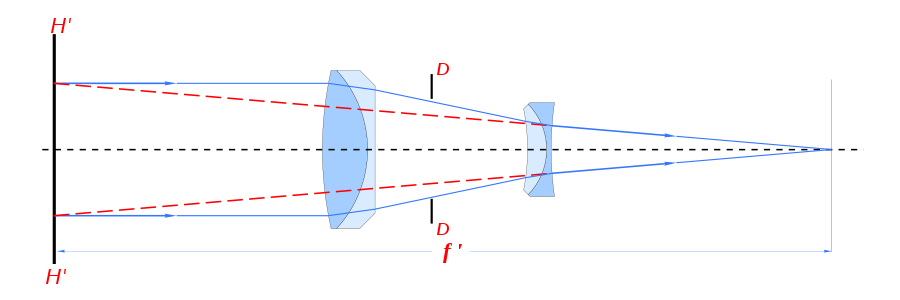

People initially assumed that this meant the sensor was smaller, which could be true. There is another possibility: you can design a multi-element lens. In its most basic form, this uses a convex lens to converge incoming light rays. Before they converge, another lens group acts as a concave lens, which causes the light rays to diverge onto a straighter path again. This lets you build a lens that has a longer focal length than its physical size would generally support (figure 12). When Apple repeatedly called the 56mm lens a "telephoto" lens, I was curious about that word choice. Everything I have said is speculation; we will have to wait for actual measurements.

Figure 12

In this telephoto diagram, H' is the principal plane, and it lies outside the lens entirely. This makes for a much longer focal length. Image sourced from Wikipedia.

Depth of Field

I spent this whole time talking about the functional difference between two cameras. What else can you do with two cameras? You can depth map the environment, which is what Apple is doing for their not-quite-ready Portrait mode. It will also be immensely important for Augmented Reality applications. There are some caveats to this process, such as edges of objects and reflective or transparent objects being difficult to describe. Hair is a good example of something that is difficult in stereo depth mapping. Apple is no doubt using secret sauce to approximate edges and refine the effect. What kinds of objects it can trace, and how effectively it does it, will be a fascinating study.

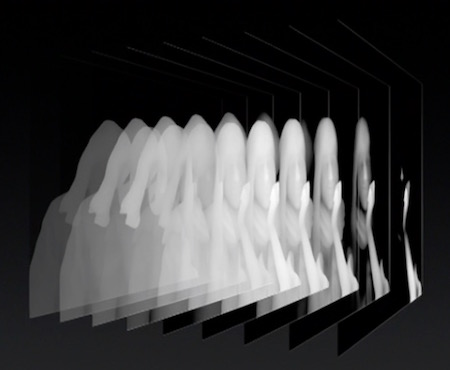

Figure 10

iPhone 7 Plus depth mapping feature demonstrated during Apple's keynote on September 7th, 2016.
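For a feel of how two cameras yield depth, here is a minimal sketch of the textbook stereo relationship: depth is proportional to the focal length times the distance between the cameras, divided by how far a feature shifts between the two images. This is not Apple's pipeline. It assumes an idealized, rectified pair of identical pinhole cameras, whereas the iPhone's two lenses have different focal lengths and would need to be matched first. All the numbers below are hypothetical.

```python
def depth_from_disparity(focal_length_px: float, baseline_m: float,
                         disparity_px: float) -> float:
    """Depth in meters of a feature seen by an idealized, rectified stereo pair.

    focal_length_px: focal length expressed in pixels (hypothetical value)
    baseline_m:      distance between the two camera centers in meters
    disparity_px:    horizontal shift of the feature between the two images
    """
    if disparity_px <= 0:
        # No measurable shift: the feature is effectively at infinity.
        return float("inf")
    return focal_length_px * baseline_m / disparity_px


# Hypothetical numbers: 3000 px focal length, 1 cm between the cameras.
for disparity in (60, 30, 10, 1):
    depth = depth_from_disparity(3000.0, 0.01, disparity)
    print(f"disparity {disparity:>2} px -> depth {depth:.2f} m")
```

With such a short baseline, distant objects shift by only a pixel or so, which hints at why fine detail like hair and far backgrounds are hard to separate cleanly.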

There is a lot of concern about how authentic the "fake" DoF blur will look, and it could go either way. A simple implementation could be a Gaussian Blur effect applied crudely, which would indeed look mediocre. The other possibility is that it will look great. It is entirely possible that the depth map has enough granularity to understand faces accurately, and that Apple is simulating an aperture with blades and creating a circle of confusion approximation at various depths. How to simulate an optical system to produce great-looking bokeh is entirely a known quantity. We see it with digital lenses all the time in cinema CG scenes. The only questions are: How good is the depth map output? How ambitious was Apple with their optical simulation?
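To illustrate what a circle of confusion approximation at various depths could mean, here is a minimal sketch using the standard thin-lens formula for blur circle size. It is not Apple's method. The simulated lens (50mm at ƒ/1.8 on a full-frame camera, focused at 1 meter) is a hypothetical choice, and a real renderer would still have to turn each blur diameter into an aperture-shaped kernel per pixel.

```python
def blur_circle_diameter_mm(focal_length_mm: float, f_number: float,
                            focus_distance_mm: float,
                            subject_distance_mm: float) -> float:
    """Diameter of the blur circle on the sensor for a point at
    subject_distance_mm when a thin lens is focused at focus_distance_mm."""
    aperture_diameter = focal_length_mm / f_number
    magnification = focal_length_mm / (focus_distance_mm - focal_length_mm)
    defocus = abs(subject_distance_mm - focus_distance_mm) / subject_distance_mm
    return aperture_diameter * magnification * defocus


# Hypothetical simulated lens: 50mm at f/1.8, focused on a face 1 m away.
for distance_mm in (1000, 1500, 3000, 10000):
    blur = blur_circle_diameter_mm(50.0, 1.8, 1000.0, distance_mm)
    print(f"{distance_mm / 1000:.1f} m -> blur circle {blur:.3f} mm")
```

Feeding each pixel's depth-map value through a function like this gives a blur size that grows smoothly with distance from the focus plane, which is what separates a convincing result from a uniform Gaussian blur over the background.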

Grab Bag

One team at Apple that has been hitting it out of the park for years, but getting little recognition, finally got some: the ISP team. They've been responsible for a lot of the magic that makes iPhone photos good for quite a while now. Thumbs up, guys and gals.

iPhones now shoot in wide color. See my article on the subject. It's a bit unclear which iPhones inherit this feature with iOS 10. With the new RAW APIs it is probably possible to extract wide color from other iPhone cameras. RAW files don't inherently contain a color space, white balance, or many of the other attributes we tend to think of in digital imagery. It's a bunch of bits assembled by the ISP which has to be processed at display time. This means that RAW can and often does contain color and bit depth information outside of what your display can realize.

It would surprise me if Apple isn't using some kind of cross-camera mixing to increase the capability of both cameras. For example, when taking a portrait, it could use the wide-angle lens to gather more luma samples from the face and improve photo quality within the ISP. Perhaps it is even used just for noise control.

Head of Jean d’Aire, and other sculptures by Auguste Rodin, are on display at Stanford University's Rodin Sculpture Garden.

For you folks on an extended gamut device, such as a new iPhone 7, iPad Pro, or iMac 5K, figure 7 and figure 8 are reproduced in Display P3 gamut for your viewing enjoyment.